Komodor

Port's Komodor integration allows you to model Komodor resources in your software catalog and ingest data into them.

Overview

This integration allows you to map, organize, and sync your desired Komodor resources and their metadata in Port.

Supported Resources

The resources that can be ingested from Komodor into Port are listed below:

- Services

- Health monitors

Prerequisites

Generate a Komodor API Key

- Log in to the Komodor platform.

- Access the API Keys management page:

  - Click on your user profile in the top-right corner of the platform.

  - Select API Keys from the dropdown menu.

- Generate a new API key:

  - Click the Generate Key button.

  - Provide a descriptive name for the API key to help you identify its purpose later (e.g., "Port.io api key").

  - Click the button to create the key.

- Copy the token and save it in a secure location.

To read more, see the Komodor documentation.

Make sure the user who creates the API key has view permissions (ideally full access) for the resources you wish to ingest into Port, since the API key inherits the user's permissions.

Setup

Choose one of the following installation methods:

- Hosted by Port

- Real-time (self-hosted)

- Scheduled (CI)

Using this installation option means that the integration will be hosted by Port, with a customizable resync interval to ingest data into Port.

Live event support

Currently, live events are not supported for this integration.

Resyncs are performed periodically (at a configurable interval) or can be triggered manually from Port's UI.

Therefore, real-time events (including GitOps) will not be ingested into Port immediately.

Live event support for this integration is a work in progress and is planned for a future release.

Alternatively, you can install the integration using the Real-time (self-hosted) method to update Port in real time using webhooks.

Installation

To install, follow these steps:

- Go to the Data sources page of your portal.

- Click on the + Data source button in the top-right corner.

- Click on the relevant integration in the list.

- Under Select your installation method, choose Hosted by Port.

- Configure the integration settings and application settings as you wish (see below for details).

Application settings

Every integration hosted by Port has the following customizable application settings, which are configurable after installation:

- Resync interval: The frequency at which Port will ingest data from the integration. Options range from every 1 hour to once a day.

- Send raw data examples: A boolean toggle (enabled by default). If enabled, raw data examples will be sent from the integration to Port. These examples are used when testing your mapping configuration: they allow you to run your jq expressions against real data and see the results.

Integration settings

Every integration has its own tool-specific settings, under the Integration settings section.

Each of these settings has an ⓘ icon next to it, which you can hover over to see a description of the setting.

Port secrets

Some integration settings require sensitive pieces of data, such as tokens.

For these settings, Port secrets will be used, ensuring that your sensitive data is encrypted and secure.

When filling in such a setting, its value will be obscured (shown as ••••••••).

For each such setting, Port will automatically create a secret in your organization.

To see all secrets in your organization, follow these steps.

Limitations

- The maximum time for a full sync to run is based on the configured resync interval. For very large amounts of data where a resync operation is expected to take longer, please use a longer interval.

Port source IP addresses

When using this installation method, Port will make outbound calls to your 3rd-party applications from static IP addresses.

You may need to add these addresses to your allowlist, in order to allow Port to interact with the integrated service:

- Europe (EU): 54.73.167.226, 63.33.143.237, 54.76.185.219

- United States (US): 3.234.37.33, 54.225.172.136, 3.225.234.99
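If you manage your allowlist in code, a small helper can keep these addresses in one place. The sketch below uses Python's standard `ipaddress` module. The grouping assumes the first three addresses listed above belong to the EU region and the last three to the US region, so verify this against Port's documentation before relying on it. The names `PORT_SOURCE_IPS`, `is_port_source_ip` and `allowlist_cidrs` are hypothetical.

```python
import ipaddress

# Port's static source IPs from this page, grouped by region.
# ASSUMPTION: first three are EU, last three are US.
PORT_SOURCE_IPS = {
    "EU": ["54.73.167.226", "63.33.143.237", "54.76.185.219"],
    "US": ["3.234.37.33", "54.225.172.136", "3.225.234.99"],
}


def is_port_source_ip(addr: str, region: str) -> bool:
    """Return True if addr is one of the listed Port source IPs for region."""
    candidate = ipaddress.ip_address(addr)
    allowed = {ipaddress.ip_address(ip) for ip in PORT_SOURCE_IPS[region]}
    return candidate in allowed


def allowlist_cidrs(region: str) -> list[str]:
    """Render the region's addresses as single-host /32 CIDR entries."""
    return [str(ipaddress.ip_network(f"{ip}/32")) for ip in PORT_SOURCE_IPS[region]]


if __name__ == "__main__":
    for cidr in allowlist_cidrs("EU"):
        print(cidr)
```

Emitting `/32` entries keeps each rule scoped to a single host. This is usually what firewall and security-group tools expect when you allowlist individual addresses.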

Using this installation method means that the integration will be able to update Port in real time.

Prerequisites

To install the integration, you need a Kubernetes cluster that the integration's container chart will be deployed to.

Please make sure that you have kubectl and helm installed on your machine, and that your kubectl CLI is connected to the Kubernetes cluster where you plan to install the integration.

If you are having trouble installing this integration, please refer to these troubleshooting steps.

For details about the available parameters for the installation, see the table below.

- Helm

- ArgoCD

To install the integration using Helm:

- Go to the Komodor data source page in your portal.

- Select the Real-time and always on method.

- A helm command will be displayed, with default values already filled out (e.g. your Port client ID, client secret, etc.).

  Copy the command, replace the placeholders with your values, then run it in your terminal to install the integration.

The baseUrl, port_region, port.baseUrl, portBaseUrl, port_base_url and OCEAN__PORT__BASE_URL parameters are used to select which instance of the Port API will be used.

Port exposes two API instances, one for the EU region of Port, and one for the US region of Port.

- If you use the EU region of Port (https://app.getport.io), your API URL is https://api.getport.io.

- If you use the US region of Port (https://app.us.getport.io), your API URL is https://api.us.getport.io.
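The region rule above is a simple lookup. As a sketch, the hypothetical helper below derives the API base URL from the Port app URL you log in to. It uses only the two region pairs listed on this page and rejects anything else rather than guessing.

```python
from urllib.parse import urlparse

# Port app host -> Port API base URL, for the two regions described above.
PORT_API_BY_APP_HOST = {
    "app.getport.io": "https://api.getport.io",        # EU region
    "app.us.getport.io": "https://api.us.getport.io",  # US region
}


def port_api_base_url(app_url: str) -> str:
    """Return the API base URL matching the Port app URL (any path is ignored)."""
    host = urlparse(app_url).hostname
    try:
        return PORT_API_BY_APP_HOST[host]
    except KeyError:
        raise ValueError(f"Unrecognized Port app URL: {app_url!r}") from None


if __name__ == "__main__":
    print(port_api_base_url("https://app.us.getport.io/organization"))
```

The returned value is what you would pass as `port.baseUrl` (Helm/ArgoCD) or `OCEAN__PORT__BASE_URL` (CI).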

To install the integration using ArgoCD:

- Create a values.yaml file in argocd/my-ocean-komodor-integration in your git repository with the content:

Remember to replace the placeholder for KOMODOR_API_KEY.

```yaml
initializePortResources: true
scheduledResyncInterval: 120
integration:
  identifier: my-ocean-komodor-integration
  type: komodor
  eventListener:
    type: POLLING
  secrets:
    komodorApiKey: KOMODOR_API_KEY
```

- Install the my-ocean-komodor-integration ArgoCD Application by creating the following my-ocean-komodor-integration.yaml manifest:

Remember to replace the placeholders for YOUR_PORT_CLIENT_ID, YOUR_PORT_CLIENT_SECRET and YOUR_GIT_REPO_URL.

Documentation for ArgoCD multiple sources can be found here.

ArgoCD Application

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: my-ocean-komodor-integration
  namespace: argocd
spec:
  destination:
    namespace: my-ocean-komodor-integration
    server: https://kubernetes.default.svc
  project: default
  sources:
    - repoURL: 'https://port-labs.github.io/helm-charts/'
      chart: port-ocean
      targetRevision: 0.1.14
      helm:
        valueFiles:
          - $values/argocd/my-ocean-komodor-integration/values.yaml
        parameters:
          - name: port.clientId
            value: YOUR_PORT_CLIENT_ID
          - name: port.clientSecret
            value: YOUR_PORT_CLIENT_SECRET
          - name: port.baseUrl
            value: https://api.getport.io
    - repoURL: YOUR_GIT_REPO_URL
      targetRevision: main
      ref: values
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true
```


- Apply your application manifest with kubectl:

```shell
kubectl apply -f my-ocean-komodor-integration.yaml
```

This table summarizes the available parameters for the installation. The parameters specific to this integration are listed last.

| Parameter | Description | Required |
|---|---|---|
| port.clientId | Your Port client ID | ✅ |
| port.clientSecret | Your Port client secret | ✅ |
| port.baseUrl | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | ✅ |
| integration.identifier | Change the identifier to describe your integration | ✅ |
| integration.type | The integration type | ✅ |
| integration.eventListener.type | The event listener type | ✅ |
| scheduledResyncInterval | The number of minutes between each resync | ❌ |
| initializePortResources | Defaults to true. When set to true, the integration creates default blueprints and the Port app config mapping | ❌ |
| integration.secrets.komodorApiKey | The Komodor API key | ✅ |

For advanced configuration such as proxies or self-signed certificates, click here.

Event listener

The integration uses polling to pull the configuration from Port every minute and check it for changes. If there is a change, a resync will occur.
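Here is a minimal sketch of a poll-and-compare loop that illustrates the change-detection behavior. It is not Ocean's implementation. `fetch_config` and `resync` are hypothetical callables standing in for "read the mapping from Port" and "run a resync", and configs are compared by hashing a canonical JSON dump. Treating the first poll as a change, so that an initial resync runs, is also an assumption of this sketch.

```python
import hashlib
import json
import time
from typing import Any, Callable, Optional


def config_fingerprint(config: dict[str, Any]) -> str:
    """Stable hash of a config; key order does not affect the result."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


def poll_for_changes(
    fetch_config: Callable[[], dict[str, Any]],
    resync: Callable[[dict[str, Any]], None],
    iterations: int,
    interval_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Poll fetch_config and call resync whenever the config changes.

    Returns the number of resyncs triggered.
    """
    last_seen: Optional[str] = None  # None forces a resync on the first poll
    resyncs = 0
    for i in range(iterations):
        config = fetch_config()
        fingerprint = config_fingerprint(config)
        if fingerprint != last_seen:
            resync(config)
            resyncs += 1
            last_seen = fingerprint
        if i < iterations - 1:
            sleep(interval_seconds)  # wait one polling interval (a minute by default)
    return resyncs
```

Injecting `sleep` lets the loop be exercised without waiting a real minute between polls.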

This workflow/pipeline runs the Komodor integration once and then exits, which is useful for scheduled data ingestion.

If you want the integration to update Port in real time, use the Real-time (self-hosted) installation option.

- GitHub

- Jenkins

- Azure DevOps

- GitLab

Make sure to configure the following GitHub secrets:

| Parameter | Description | Required |
|---|---|---|
| OCEAN__PORT__CLIENT_ID | Your Port client ID | ✅ |
| OCEAN__PORT__CLIENT_SECRET | Your Port client secret | ✅ |
| OCEAN__PORT__BASE_URL | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | ✅ |
| OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY | The Komodor API key | ✅ |
| OCEAN__INTEGRATION__IDENTIFIER | Change the identifier to describe your integration. If not set, the default one is used | ❌ |
| OCEAN__INITIALIZE_PORT_RESOURCES | Defaults to true. When set to false, the integration will not create default blueprints and the Port app config mapping | ❌ |

Here is an example komodor-integration.yml workflow file:

```yaml
name: Komodor Exporter Workflow

on:
  workflow_dispatch:
  schedule:
    - cron: '0 */1 * * *' # Determines the scheduled interval for this workflow. This example runs every hour.

jobs:
  run-integration:
    runs-on: ubuntu-latest
    timeout-minutes: 30 # Set a time limit for the job

    steps:
      - uses: port-labs/ocean-sail@v1
        with:
          type: 'komodor'
          port_client_id: ${{ secrets.OCEAN__PORT__CLIENT_ID }}
          port_client_secret: ${{ secrets.OCEAN__PORT__CLIENT_SECRET }}
          port_base_url: https://api.getport.io
          config: |
            komodor_api_key: ${{ secrets.OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY }}
```
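This page names the Komodor key both as the Helm value `integration.secrets.komodorApiKey` and as the environment variable `OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY`. The sketch below assumes the convention these two names suggest: the config key is converted to upper snake case and prefixed with `OCEAN__INTEGRATION__CONFIG__`. The helper is hypothetical, and you should treat the convention as an assumption rather than a documented rule.

```python
import re

ENV_PREFIX = "OCEAN__INTEGRATION__CONFIG__"  # prefix seen in the tables above


def config_key_to_env(key: str) -> str:
    """Convert a camelCase or snake_case config key to its env var name."""
    # Insert "_" between a lowercase letter/digit and the next uppercase letter:
    # komodorApiKey -> komodor_Api_Key. snake_case keys pass through unchanged.
    snake = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", key)
    return ENV_PREFIX + snake.upper()


if __name__ == "__main__":
    print(config_key_to_env("komodorApiKey"))
```

A helper like this can catch mismatches between the names used in a values file and the secret names configured in CI.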

Your Jenkins agent should be able to run docker commands.

Make sure to configure the following Jenkins credentials of type Secret Text:

| Parameter | Description | Required |
|---|---|---|
| OCEAN__PORT__CLIENT_ID | Your Port client ID | ✅ |
| OCEAN__PORT__CLIENT_SECRET | Your Port client secret | ✅ |
| OCEAN__PORT__BASE_URL | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | ✅ |
| OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY | The Komodor API key | ✅ |
| OCEAN__INTEGRATION__IDENTIFIER | Change the identifier to describe your integration. If not set, the default one is used | ❌ |
| OCEAN__INITIALIZE_PORT_RESOURCES | Defaults to true. When set to false, the integration will not create default blueprints and the Port app config mapping | ❌ |

Here is an example Jenkinsfile (Groovy pipeline):

```groovy
pipeline {
    agent any

    stages {
        stage('Run Komodor Integration') {
            steps {
                script {
                    withCredentials([
                        string(credentialsId: 'OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY', variable: 'OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY'),
                        string(credentialsId: 'OCEAN__PORT__CLIENT_ID', variable: 'OCEAN__PORT__CLIENT_ID'),
                        string(credentialsId: 'OCEAN__PORT__CLIENT_SECRET', variable: 'OCEAN__PORT__CLIENT_SECRET'),
                    ]) {
                        sh('''
                            # Set Docker image and run the container
                            integration_type="komodor"
                            version="latest"
                            image_name="ghcr.io/port-labs/port-ocean-${integration_type}:${version}"
                            docker run -i --rm --platform=linux/amd64 \
                                -e OCEAN__EVENT_LISTENER='{"type":"ONCE"}' \
                                -e OCEAN__INITIALIZE_PORT_RESOURCES=true \
                                -e OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY=$OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY \
                                -e OCEAN__PORT__CLIENT_ID=$OCEAN__PORT__CLIENT_ID \
                                -e OCEAN__PORT__CLIENT_SECRET=$OCEAN__PORT__CLIENT_SECRET \
                                -e OCEAN__PORT__BASE_URL='https://api.getport.io' \
                                $image_name

                            exit $?
                        ''')
                    }
                }
            }
        }
    }
}
```

Your Azure DevOps agent should be able to run docker commands. Learn more about agents here.

Variable groups store values and secrets you'll use in your pipelines across your project.

Setting Up Your Credentials

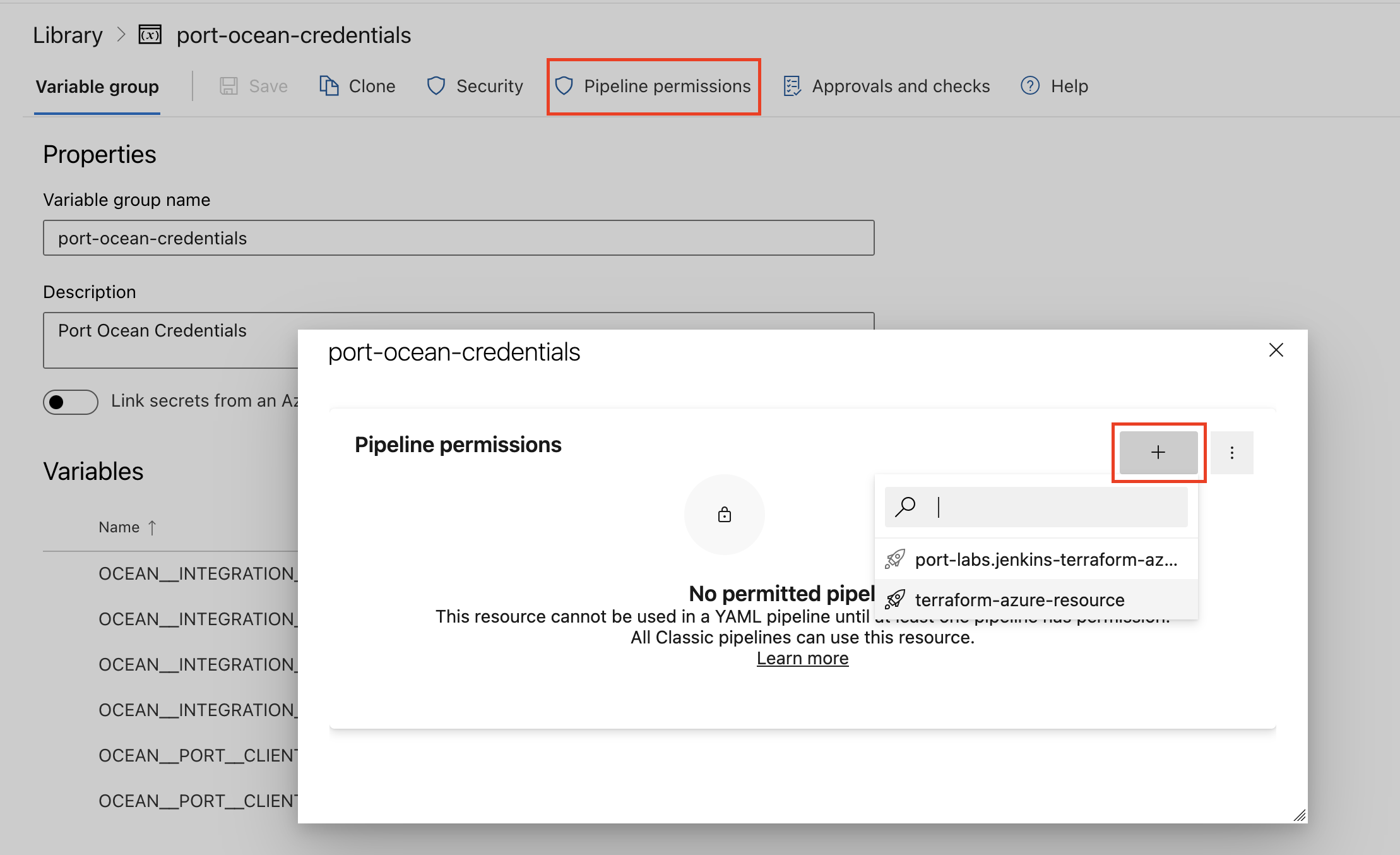

- Create a Variable Group: Name it port-ocean-credentials.

- Store the required variables (see the table below).

- Authorize Your Pipeline:

  - Go to "Library" -> "Variable groups."

  - Find port-ocean-credentials and click on it.

  - Select "Pipeline Permissions" and add your pipeline to the authorized list.

| Parameter | Description | Required |
|---|---|---|
| OCEAN__PORT__CLIENT_ID | Your Port client ID | ✅ |
| OCEAN__PORT__CLIENT_SECRET | Your Port client secret | ✅ |
| OCEAN__PORT__BASE_URL | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | ✅ |
| OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY | The Komodor API key | ✅ |
| OCEAN__INTEGRATION__IDENTIFIER | Change the identifier to describe your integration. If not set, the default one is used | ❌ |
| OCEAN__INITIALIZE_PORT_RESOURCES | Defaults to true. When set to false, the integration will not create default blueprints and the Port app config mapping | ❌ |

Here is an example komodor-integration.yml pipeline file:

```yaml
trigger:
  - main

pool:
  vmImage: "ubuntu-latest"

variables:
  - group: port-ocean-credentials

steps:
  - script: |
      # Set Docker image and run the container
      integration_type="komodor"
      version="latest"
      image_name="ghcr.io/port-labs/port-ocean-$integration_type:$version"

      docker run -i --rm \
        -e OCEAN__EVENT_LISTENER='{"type":"ONCE"}' \
        -e OCEAN__INITIALIZE_PORT_RESOURCES=true \
        -e OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY=$(OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY) \
        -e OCEAN__PORT__CLIENT_ID=$(OCEAN__PORT__CLIENT_ID) \
        -e OCEAN__PORT__CLIENT_SECRET=$(OCEAN__PORT__CLIENT_SECRET) \
        -e OCEAN__PORT__BASE_URL='https://api.getport.io' \
        $image_name

      exit $?
    displayName: 'Ingest Data into Port'
```

Make sure to configure the following GitLab variables:

| Parameter | Description | Required |
|---|---|---|
| OCEAN__PORT__CLIENT_ID | Your Port client ID | ✅ |
| OCEAN__PORT__CLIENT_SECRET | Your Port client secret | ✅ |
| OCEAN__PORT__BASE_URL | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | ✅ |
| OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY | The Komodor API key | ✅ |
| OCEAN__INTEGRATION__IDENTIFIER | Change the identifier to describe your integration. If not set, the default one is used | ❌ |
| OCEAN__INITIALIZE_PORT_RESOURCES | Defaults to true. When set to false, the integration will not create default blueprints and the Port app config mapping | ❌ |

Here is an example .gitlab-ci.yml pipeline file:

```yaml
default:
  image: docker:24.0.5
  services:
    - docker:24.0.5-dind
  before_script:
    - docker info

variables:
  INTEGRATION_TYPE: komodor
  VERSION: latest

stages:
  - ingest

ingest_data:
  stage: ingest
  variables:
    IMAGE_NAME: ghcr.io/port-labs/port-ocean-$INTEGRATION_TYPE:$VERSION
  script:
    - |
      docker run -i --rm --platform=linux/amd64 \
        -e OCEAN__EVENT_LISTENER='{"type":"ONCE"}' \
        -e OCEAN__INITIALIZE_PORT_RESOURCES=true \
        -e OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY=$OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY \
        -e OCEAN__PORT__CLIENT_ID=$OCEAN__PORT__CLIENT_ID \
        -e OCEAN__PORT__CLIENT_SECRET=$OCEAN__PORT__CLIENT_SECRET \
        -e OCEAN__PORT__BASE_URL='https://api.getport.io' \
        $IMAGE_NAME

  rules: # Run only when changes are made to the main branch
    - if: '$CI_COMMIT_BRANCH == "main"'
```
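All of the CI examples above start the same one-shot container. If you drive it from your own script instead, the sketch below builds the equivalent `docker run` argument list. The helper names are hypothetical. One deliberate difference from the examples is that secrets are passed as `-e NAME` without a value, which tells Docker to copy the variable from the calling environment. That way the secret values stay off the command line.

```python
import shlex


def ocean_image(integration_type: str = "komodor", version: str = "latest") -> str:
    """Image name pattern used by the CI examples on this page."""
    return f"ghcr.io/port-labs/port-ocean-{integration_type}:{version}"


def one_shot_docker_args(secret_names: list[str], image: str, port_base_url: str) -> list[str]:
    """Build argv for a single ONCE-mode run of the integration container."""
    args = [
        "docker", "run", "-i", "--rm", "--platform=linux/amd64",
        # ONCE: run a single resync and exit, as in the CI examples above.
        "-e", 'OCEAN__EVENT_LISTENER={"type":"ONCE"}',
        "-e", "OCEAN__INITIALIZE_PORT_RESOURCES=true",
        "-e", f"OCEAN__PORT__BASE_URL={port_base_url}",
    ]
    for name in secret_names:
        # "-e NAME" with no value: Docker reads NAME from the caller's environment.
        args += ["-e", name]
    args.append(image)
    return args


if __name__ == "__main__":
    argv = one_shot_docker_args(
        [
            "OCEAN__PORT__CLIENT_ID",
            "OCEAN__PORT__CLIENT_SECRET",
            "OCEAN__INTEGRATION__CONFIG__KOMODOR_API_KEY",
        ],
        ocean_image(),
        "https://api.getport.io",
    )
    # Print a copy-pasteable command; subprocess.run(argv, check=True) would execute it.
    print(shlex.join(argv))
```

Because the arguments are passed as a list, no shell quoting is needed around the `OCEAN__EVENT_LISTENER` JSON value.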


Configuration

Port integrations use a YAML mapping block to ingest data from Komodor's API into Port.

The mapping makes use of the JQ JSON processor to select, modify, concatenate, transform and perform other operations on existing fields and values from the integration API.

Examples

Examples of blueprints and the relevant integration configurations:

Services

Service Blueprint

```json
{
  "identifier": "komodorService",
  "title": "Komodor Service",
  "icon": "Komodor",
  "schema": {
    "properties": {
      "status": {
        "type": "string",
        "title": "Status",
        "enum": ["healthy", "unhealthy"],
        "enumColors": {
          "healthy": "green",
          "unhealthy": "red"
        }
      },
      "cluster_name": {
        "icon": "Cluster",
        "type": "string",
        "title": "Cluster"
      },
      "workload_kind": {
        "icon": "Deployment",
        "type": "string",
        "title": "Kind"
      },
      "service_name": {
        "icon": "DefaultProperty",
        "type": "string",
        "title": "Service"
      },
      "namespace_name": {
        "icon": "Environment",
        "type": "string",
        "title": "Namespace"
      },
      "last_deploy_at": {
        "type": "string",
        "title": "Last Deploy At",
        "format": "date-time"
      },
      "komodor_link": {
        "type": "string",
        "title": "Komodor Link",
        "format": "url",
        "icon": "LinkOut"
      },
      "labels": {
        "icon": "JsonEditor",
        "type": "object",
        "title": "Labels"
      }
    },
    "required": []
  },
  "mirrorProperties": {},
  "calculationProperties": {},
  "aggregationProperties": {},
  "relations": {}
}
```

Integration configuration

```yaml
deleteDependentEntities: true
createMissingRelatedEntities: false
enableMergeEntity: true
resources:
  - kind: komodorService
    selector:
      query: 'true'
    port:
      entity:
        mappings:
          identifier: .uid
          title: .service
          blueprint: '"komodorService"'
          properties:
            service_id: .uid
            status: .status
            cluster_name: .cluster
            workload_kind: .kind
            namespace_name: .namespace
            service_name: .service
            komodor_link: .link + "&utmSource=port"
            labels: .labels
            last_deploy_at: .lastDeploy.endTime | todate
            last_deploy_status: .lastDeploy.status
```
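To see what this mapping does to a single record, here is a Python re-implementation of its jq expressions. It is offered as an illustration, not as the way Ocean evaluates mappings. jq's `todate` formats epoch seconds as `%Y-%m-%dT%H:%M:%SZ` in UTC, which the `todate` helper below mirrors. `map_komodor_service` is a hypothetical name.

```python
from datetime import datetime, timezone
from typing import Any


def todate(epoch_seconds: int) -> str:
    """Mimic jq's todate: epoch seconds -> ISO 8601 UTC string."""
    return datetime.fromtimestamp(epoch_seconds, tz=timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def map_komodor_service(svc: dict[str, Any]) -> dict[str, Any]:
    """Apply the komodorService mapping above to one item of data.services."""
    return {
        "identifier": svc["uid"],
        "title": svc["service"],
        "blueprint": "komodorService",
        "properties": {
            "service_id": svc["uid"],
            "status": svc["status"],
            "cluster_name": svc["cluster"],
            "workload_kind": svc["kind"],
            "namespace_name": svc["namespace"],
            "service_name": svc["service"],
            # jq string concatenation: .link + "&utmSource=port"
            "komodor_link": svc["link"] + "&utmSource=port",
            "labels": svc["labels"],
            "last_deploy_at": todate(svc["lastDeploy"]["endTime"]),
            "last_deploy_status": svc["lastDeploy"]["status"],
        },
    }
```

For the sample `endTime` of `1740140297` used in the payload later on this page, `todate` yields `2025-02-21T12:18:17Z`.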

Health Monitors

Health Monitor blueprint

```json
{
  "identifier": "komodorHealthMonitoring",
  "title": "Komodor Health Monitoring",
  "icon": "Komodor",
  "schema": {
    "properties": {
      "supporting_data": {
        "icon": "JsonEditor",
        "type": "object",
        "title": "Supporting Data"
      },
      "komodor_link": {
        "icon": "LinkOut",
        "type": "string",
        "title": "Komodor Link",
        "format": "url"
      },
      "severity": {
        "type": "string",
        "title": "Severity",
        "enum": ["high", "medium", "low"],
        "enumColors": {
          "high": "red",
          "medium": "orange",
          "low": "yellow"
        }
      },
      "created_at": {
        "type": "string",
        "title": "Created at",
        "format": "date-time"
      },
      "last_evaluated_at": {
        "icon": "Clock",
        "type": "string",
        "title": "Last Evaluated At",
        "format": "date-time"
      },
      "check_type": {
        "type": "string",
        "title": "Check Type"
      },
      "status": {
        "type": "string",
        "title": "Status",
        "enum": ["open", "confirmed", "resolved", "dismissed", "ignored", "manually_resolved"],
        "enumColors": {
          "open": "red",
          "confirmed": "turquoise",
          "resolved": "green",
          "dismissed": "purple",
          "ignored": "darkGray",
          "manually_resolved": "bronze"
        }
      }
    },
    "required": []
  },
  "mirrorProperties": {},
  "calculationProperties": {},
  "aggregationProperties": {},
  "relations": {
    "service": {
      "title": "Service",
      "target": "komodorService",
      "required": false,
      "many": false
    }
  }
}
```

Integration configuration

```yaml
deleteDependentEntities: true
createMissingRelatedEntities: false
enableMergeEntity: true
resources:
  - kind: komodorHealthMonitoring
    selector:
      query: 'true'
    port:
      entity:
        mappings:
          identifier: .id
          title: .komodorUid | gsub("\\|"; "-") | sub("-+$"; "")
          blueprint: '"komodorHealthMonitoring"'
          properties:
            status: .status
            resource_identifier: .komodorUid | gsub("\\|"; "-") | sub("-+$"; "")
            severity: .severity
            supporting_data: .supportingData
            komodor_link: .link + "&utmSource=port"
            created_at: .createdAt | todate
            last_evaluated_at: .lastEvaluatedAt | todate
            check_type: .checkType
            workload_type: .komodorUid | split("|") | .[0]
            cluster_name: .komodorUid | split("|") | .[1]
            namespace_name: .komodorUid | split("|") | .[2]
            workload_name: .komodorUid | split("|") | .[3]
  - kind: komodorHealthMonitoring
    selector:
      query: (.komodorUid | split("|") | length) == 4
    port:
      entity:
        mappings:
          identifier: .id
          title: .komodorUid | gsub("\\|"; "-") | sub("-+$"; "")
          blueprint: '"komodorHealthMonitoring"'
          properties: {}
          relations:
            service: .komodorUid | gsub("\\|"; "-")
```
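The komodorUid expressions above are compact, so here is a Python mirror of each one as an illustration (the function names are hypothetical). The title strips any trailing dashes left by empty trailing segments. The property splits return `null` in jq for a missing index, which is shown here as `None`. The relation is only set when the selector's four-part condition holds. The test uses a hypothetical UID with a trailing separator.

```python
import re
from typing import Optional

PART_NAMES = ["workload_type", "cluster_name", "namespace_name", "workload_name"]


def uid_to_title(komodor_uid: str) -> str:
    # jq: .komodorUid | gsub("\\|"; "-") | sub("-+$"; "")
    return re.sub(r"-+$", "", komodor_uid.replace("|", "-"))


def uid_parts(komodor_uid: str) -> dict[str, Optional[str]]:
    # jq: .komodorUid | split("|") | .[0] ... .[3]
    # jq yields null for a missing index; mirrored here as None.
    parts: list[Optional[str]] = list(komodor_uid.split("|"))
    parts += [None] * (len(PART_NAMES) - len(parts))
    return dict(zip(PART_NAMES, parts))


def service_relation(komodor_uid: str) -> Optional[str]:
    # Second resource: selector (.komodorUid | split("|") | length) == 4,
    # then gsub only, without the trailing-dash cleanup used for the title.
    if len(komodor_uid.split("|")) != 4:
        return None
    return komodor_uid.replace("|", "-")
```

For the four-part UID in the sample payload below, the title, `resource_identifier` and service relation all come out as `WORKLOAD_KIND-CLUSTER_NAME-NAMESPACE_NAME-WORKLOAD_NAME`.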

Let's Test It

This section includes sample response data from Komodor. It also includes the entities created from the resync event, based on the Ocean configuration provided in the previous section.

Payload

Here is an example of the payload structure from Komodor. Placeholder values are written in uppercase letters for readability:

Service response data

```json
{
  "data": {
    "services": [
      {
        "annotations": {
          "checksum/config": "CHECKSUM",
          "deployment.kubernetes.io/revision": "1",
          "meta.helm.sh/release-name": "komodor-agent",
          "meta.helm.sh/release-namespace": "komodor"
        },
        "cluster": "test",
        "kind": "Deployment",
        "labels": {
          "app.kubernetes.io/instance": "komodor-agent",
          "app.kubernetes.io/managed-by": "Helm",
          "app.kubernetes.io/name": "komodor-agent",
          "app.kubernetes.io/version": "X.X.X",
          "helm.sh/chart": "komodor-agent-X.X.X"
        },
        "lastDeploy": {
          "endTime": 1740140297,
          "startTime": 1740140297,
          "status": "success"
        },
        "link": "https://app.komodor.com/services/ACCOUNT.CLUSTER.SERVICE?workspaceId=null&referer=public-api",
        "namespace": "komodor",
        "service": "komodor-agent",
        "status": "healthy",
        "uid": "INTERNAL_KOMODOR_UID"
      }
    ]
  },
  "meta": {
    "nextPage": 1,
    "page": 0,
    "pageSize": 1
  }
}
```
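The `meta` block suggests that the service list is paginated. As a sketch, here is how a client could walk every page. It assumes `meta.nextPage` is absent or null on the last page, which this page does not state, and it also stops on an empty page as a safeguard. `fetch_page` is a hypothetical callable that returns one parsed response body like the one above.

```python
from typing import Any, Callable, Iterator, Optional


def iter_services(fetch_page: Callable[[int], dict[str, Any]]) -> Iterator[dict[str, Any]]:
    """Yield every service across pages by following meta.nextPage."""
    page: Optional[int] = 0
    while page is not None:
        body = fetch_page(page)
        services = body["data"]["services"]
        if not services:  # safeguard against an endless loop on empty pages
            break
        yield from services
        # ASSUMPTION: nextPage is missing or null once the last page is reached.
        page = body.get("meta", {}).get("nextPage")
```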

Health Monitor response data

```json
{
  "checkType": "restartingContainers",
  "createdAt": 1742447493,
  "id": "RANDOM_UID",
  "komodorUid": "WORKLOAD_KIND|CLUSTER_NAME|NAMESPACE_NAME|WORKLOAD_NAME",
  "lastEvaluatedAt": 1743292800,
  "link": "https://app.komodor.com/health/risks/drawer?checkCategory=workload-health&checkType=restartingContainers&violationId=78f44264-dbe1-4d0f-9096-9925f5e74ae8",
  "severity": "medium",
  "status": "open",
  "supportingData": {
    "restartingContainers": {
      "containers": [
        {
          "name": "CONTAINER_NAME",
          "restarts": 969
        }
      ],
      "restartReasons": {
        "breakdown": [
          {
            "message": "Container Exited With Error - Exit Code: 1",
            "percent": 100,
            "numOccurences": 1825,
            "reason": "ProcessExit"
          }
        ],
        "additionalInfo": {
          "podSamples": [
            { "podName": "POD_NAME_1", "restarts": 607 },
            { "podName": "POD_NAME_2", "restarts": 170 },
            { "podName": "POD_NAME_3", "restarts": 57 },
            { "podName": "POD_NAME_4", "restarts": 53 },
            { "podName": "POD_NAME_5", "restarts": 22 }
          ],
          "numRestartsOnTimeseries": 909,
          "numRestartsOnDB": 1825
        }
      }
    }
  }
}
```

Mapping Result

The combination of the sample payload and the Ocean configuration generates the following Port entities:

Service entity in Port

```json
{
  "identifier": "INTERNAL_KOMODOR_UID",
  "title": "komodor-agent",
  "blueprint": "komodorService",
  "properties": {
    "service_id": "INTERNAL_KOMODOR_UID",
    "status": "healthy",
    "cluster_name": "test",
    "workload_kind": "Deployment",
    "namespace_name": "komodor",
    "service_name": "komodor-agent",
    "komodor_link": "https://app.komodor.com/services/ACCOUNT.CLUSTER.SERVICE?workspaceId=null&referer=public-api&utmSource=port",
    "labels": {
      "app.kubernetes.io/instance": "komodor-agent",
      "app.kubernetes.io/managed-by": "Helm",
      "app.kubernetes.io/name": "komodor-agent",
      "app.kubernetes.io/version": "X.X.X",
      "helm.sh/chart": "komodor-agent-X.X.X"
    },
    "last_deploy_at": "2025-02-21T12:18:17Z",
    "last_deploy_status": "success"
  }
}
```

Health Monitor entity in Port

```json
{
  "identifier": "RANDOM_UID",
  "title": "WORKLOAD_KIND-CLUSTER_NAME-NAMESPACE_NAME-WORKLOAD_NAME",
  "blueprint": "komodorHealthMonitoring",
  "properties": {
    "status": "open",
    "resource_identifier": "WORKLOAD_KIND-CLUSTER_NAME-NAMESPACE_NAME-WORKLOAD_NAME",
    "severity": "medium",
    "supporting_data": {
      "restartingContainers": {
        "containers": [
          {
            "name": "CONTAINER_NAME",
            "restarts": 969
          }
        ],
        "restartReasons": {
          "breakdown": [
            {
              "message": "Container Exited With Error - Exit Code: 1",
              "percent": 100,
              "numOccurences": 1825,
              "reason": "ProcessExit"
            }
          ],
          "additionalInfo": {
            "podSamples": [
              { "podName": "POD_NAME_1", "restarts": 607 },
              { "podName": "POD_NAME_2", "restarts": 170 },
              { "podName": "POD_NAME_3", "restarts": 57 },
              { "podName": "POD_NAME_4", "restarts": 53 },
              { "podName": "POD_NAME_5", "restarts": 22 }
            ],
            "numRestartsOnTimeseries": 909,
            "numRestartsOnDB": 1825
          }
        }
      }
    },
    "komodor_link": "https://app.komodor.com/health/risks/drawer?checkCategory=workload-health&checkType=restartingContainers&violationId=78f44264-dbe1-4d0f-9096-9925f5e74ae8&utmSource=port",
    "created_at": "2025-03-20T05:11:33Z",
    "last_evaluated_at": "2025-03-30T00:00:00Z",
    "check_type": "restartingContainers",
    "workload_type": "WORKLOAD_KIND",
    "cluster_name": "CLUSTER_NAME",
    "namespace_name": "NAMESPACE_NAME",
    "workload_name": "WORKLOAD_NAME"
  },
  "relations": {
    "service": "WORKLOAD_KIND-CLUSTER_NAME-NAMESPACE_NAME-WORKLOAD_NAME"
  }
}
```

Connect Komodor services to k8s workloads

Prerequisites

- Install the Komodor integration.

- Install Port's k8s exporter integration on your cluster.

- Install the Komodor agent on your cluster.

Create the relation

- Navigate to the data model page of your portal.

- Click on the Komodor Service blueprint.

- Click on the ... button in the top-right corner, choose Edit blueprint, then click on the Edit JSON button.

- Update the existing JSON by incorporating the following data in it.

Blueprint relation (JSON)

```json
{
  "relations": {
    "workload": {
      "title": "Workload",
      "target": "workload",
      "required": false,
      "many": false
    }
  }
}
```

Set up mapping configuration

- Navigate to the data sources page of your portal.

- Click on the Komodor integration, and scroll to the mapping section in the bottom-left corner.

- Copy the following configuration, paste it under the resources key in the editor, then click Save & Resync.

Mapping configuration

```yaml
- kind: komodorService
  selector:
    query: 'true'
  port:
    entity:
      mappings:
        identifier: .kind + "-" + .cluster + "-" + .namespace + "-" + .service
        blueprint: '"komodorService"'
        properties: {}
        relations:
          workload: .service + "-" + .kind + "-" + .namespace + "-" + .cluster
```
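The two jq expressions above concatenate the same four fields in different orders. The sketch below mirrors both in Python (the function names are hypothetical). It assumes the Kubernetes exporter's default workload identifier is `<name>-<kind>-<namespace>-<cluster>`, which is what the relation expression relies on. The service identifier built here also matches the value that the health monitor relation `.komodorUid | gsub("\\|"; "-")` produces for a four-part komodorUid of the form `KIND|CLUSTER|NAMESPACE|NAME`.

```python
from typing import Any


def komodor_service_identifier(svc: dict[str, Any]) -> str:
    # jq: .kind + "-" + .cluster + "-" + .namespace + "-" + .service
    return "-".join([svc["kind"], svc["cluster"], svc["namespace"], svc["service"]])


def workload_relation(svc: dict[str, Any]) -> str:
    # jq: .service + "-" + .kind + "-" + .namespace + "-" + .cluster
    # ASSUMPTION: matches the k8s exporter's default workload identifier.
    return "-".join([svc["service"], svc["kind"], svc["namespace"], svc["cluster"]])
```

For the sample service on this page (`komodor-agent`, a `Deployment` in namespace `komodor` on cluster `test`), the relation target is `komodor-agent-Deployment-komodor-test`.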

This assumes that both your Komodor integration and Kubernetes exporter are using their default key and field values. If any mappings or blueprints have been modified in either integration, you may need to adjust these values accordingly.